Verified Assured Learning for Unmanned Embedded Systems (VALUES)

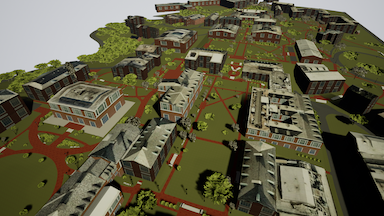

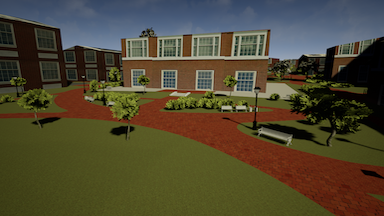

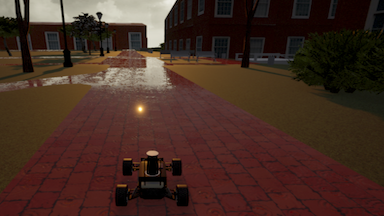

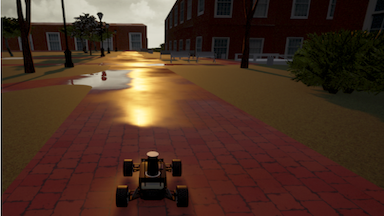

This project aims to develop a research framework for autonomous navigation using a 1/5-scale unmanned ground vehicle, with the long-term goal of combining learning-based control with physics-based models. To this end, a high-fidelity simulation environment (using CARLA) and the architecture needed for fast integration with the robot hardware were developed. The motion planning architecture uses a hybrid system formalism in which the system switches between different actions. Each action encodes a closed-loop controlled motion parametrized by a desired velocity and a lateral offset with respect to a given route. A simple greedy action-enumeration policy selects the optimal action according to a user-defined reward function, subject to collision constraints.
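The greedy action-enumeration policy described above can be sketched as follows. This is a minimal illustration, not the project's implementation. The `Action` type, the velocity and offset grids, the reward, and the collision predicate are all hypothetical placeholders. In particular, `collides` stands in for whatever forward prediction of the closed-loop motion the real planner performs.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Action:
    """Hypothetical closed-loop motion primitive: follow the route at a speed and lateral offset."""
    velocity: float        # desired longitudinal speed [m/s]
    lateral_offset: float  # desired lateral offset from the route centerline [m]


def enumerate_actions(velocities: Sequence[float], offsets: Sequence[float]) -> list[Action]:
    """Discrete action set: the Cartesian product of the two action parameters."""
    return [Action(v, d) for v, d in product(velocities, offsets)]


def greedy_select(
    actions: Sequence[Action],
    reward: Callable[[Action], float],
    collides: Callable[[Action], bool],
) -> Optional[Action]:
    """Return the highest-reward collision-free action, or None if every action collides."""
    best, best_reward = None, float("-inf")
    for action in actions:
        if collides(action):  # collision avoidance is a hard constraint, not a penalty
            continue
        r = reward(action)
        if r > best_reward:  # ties keep the first action enumerated
            best, best_reward = action, r
    return best


def reward(a: Action) -> float:
    # Hypothetical reward: track a 2 m/s target speed and stay near the centerline.
    return -abs(a.velocity - 2.0) - 0.5 * abs(a.lateral_offset)


def collides(a: Action) -> bool:
    # Hypothetical collision check: an obstacle blocks the centerline.
    return a.lateral_offset == 0.0


actions = enumerate_actions([1.0, 2.0, 3.0], [-1.0, 0.0, 1.0])
best = greedy_select(actions, reward, collides)
print(best)  # Action(velocity=2.0, lateral_offset=-1.0)
```

Exhaustive enumeration is only practical because the action space is a small grid. With more action parameters, the number of candidates grows multiplicatively.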

Surgical Robotic System for Autonomous Minimally Invasive Orthopaedic Surgery

Developed a dexterous robotic system for minimally invasive, autonomous debridement of osteolytic bone lesions in confined spaces. The system differs from state-of-the-art orthopaedic systems by combining a rigid-link robot with a flexible continuum robot that extends reach into the difficult-to-access spaces often encountered in surgery. The continuum robot is equipped with flexible debriding instruments and fiber Bragg grating (FBG) sensors. The surgeon plans the procedure on the patient’s preoperative computed tomography (CT) scan, and the robotic system performs the task autonomously under the surgeon’s supervision. An optimization-based controller generates control commands on the fly to execute the task while satisfying physical and safety constraints.
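The controller formulation used in the publications is not reproduced here. The sketch below illustrates only the general pattern of solving a small constrained optimization at every control cycle. It computes joint velocity increments `dq` that best track a desired task-space velocity through a Jacobian `J`, while per-joint bounds stand in for physical and safety constraints. The Jacobian, limits, damping weight, and the projected-gradient solver are all assumptions. A real system would more likely call a dedicated QP solver.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def mat_vec(a: Matrix, x: Vector) -> Vector:
    """Matrix-vector product for small dense matrices stored as lists of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def solve_box_qp(
    jac: Matrix,
    v_des: Vector,
    lower: Vector,
    upper: Vector,
    damping: float = 1e-3,
    iters: int = 2000,
) -> Vector:
    """Minimize ||J dq - v_des||^2 + damping * ||dq||^2 subject to lower <= dq <= upper.

    Projected gradient descent: take a gradient step, then clip each joint increment
    back into its bounds. The step 1/L uses L = 2 * (||J||_F^2 + damping), an upper bound
    on the largest Hessian eigenvalue, so the iteration converges for this convex problem.
    """
    jt = transpose(jac)
    lipschitz = 2.0 * (sum(x * x for row in jac for x in row) + damping)
    step = 1.0 / lipschitz
    dq = [min(max(0.0, lo), hi) for lo, hi in zip(lower, upper)]  # feasible start
    for _ in range(iters):
        residual = [r - v for r, v in zip(mat_vec(jac, dq), v_des)]
        # Gradient: 2 J^T (J dq - v_des) + 2 * damping * dq
        grad = [2.0 * g + 2.0 * damping * q for g, q in zip(mat_vec(jt, residual), dq)]
        dq = [min(max(q - step * g, lo), hi) for q, g, lo, hi in zip(dq, grad, lower, upper)]
    return dq


# Hypothetical 2-DOF example: the second joint saturates at its velocity limit.
dq = solve_box_qp([[1.0, 0.0], [0.0, 1.0]], [0.5, 2.0], [-1.0, -1.0], [1.0, 1.0])
print(dq)
```

When a bound becomes active, the solver trades off task-space tracking rather than violating the constraint. That trade-off is the reason for formulating control as a constrained optimization instead of using a plain Jacobian pseudo-inverse.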

- “A Dexterous Robotic System for Autonomous Debridement of Osteolytic Bone Lesions in Confined Spaces: Human Cadaver Studies”. Accepted in IEEE Transactions on Robotics, 2020.
  S. Sefati, R. Hegeman, I. Iordachita, R. Taylor, M. Armand.
- “A Surgical Robotic System for Treatment of Pelvic Osteolysis Using an FBG-Equipped Continuum Manipulator and Flexible Instruments”. IEEE/ASME Transactions on Mechatronics, 2020.
  S. Sefati, R. Hegeman, F. Alambeigi, I. Iordachita, P. Kazanzides, H. Khanuja, R. Taylor, M. Armand.
- “FBG-Based Control of a Continuum Manipulator Interacting With Obstacles”. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018.
  S. Sefati, R. Murphy, F. Alambeigi, M. Pozin, I. Iordachita, R. Taylor, M. Armand.

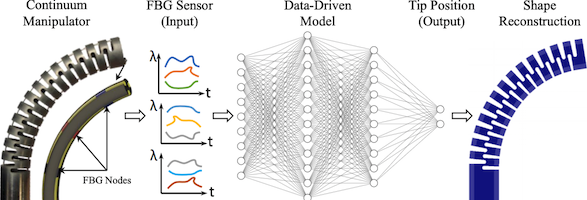

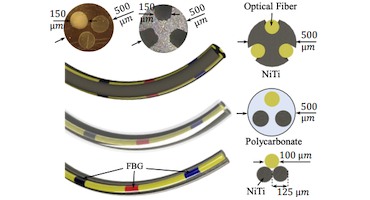

Data-Driven Shape Sensing of Continuum Robots

This work proposes a data-driven, learning-based approach for shape sensing and distal-end position estimation (DPE) of continuum robots in constrained environments using Fiber Bragg Grating (FBG) sensors. The approach uses only the sensory data from an unmodeled, uncalibrated sensor embedded in the continuum manipulator (CM) to estimate its shape and distal-end position. A deep neural network was used to improve shape sensing accuracy in the presence of disturbances, compared with mechanics-based, model-dependent approaches. A variety of flexible, small (< 0.5 mm outside diameter) sensors with different stiffness, bending capabilities, and sensing resolutions were designed and embedded in the continuum robot.
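The network architecture used in the publications is not described here. To illustrate the data-driven idea of learning a direct map from raw sensor readings to tip position without a mechanics model, the sketch below trains a small one-hidden-layer tanh network with hand-written backpropagation. Several choices are assumptions: the three FBG input channels, the 2-D tip position, the network size, and the learning rate. The dataset is synthetic and made up, standing in for recorded sensor and tracker measurements.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def synthetic_dataset(n: int, seed: int = 1) -> Tuple[List[Vector], List[Vector]]:
    """Made-up stand-in for recorded data: 3 FBG wavelength shifts -> 2-D tip position."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in range(3)]
        # Hypothetical nonlinear relation standing in for the unknown sensor-to-shape map.
        ys.append([math.sin(x[0]) + 0.3 * x[1] * x[2], 0.5 * x[1] - 0.2 * x[0] ** 2])
        xs.append(x)
    return xs, ys


class TinyMLP:
    """One-hidden-layer tanh network trained with full-batch gradient descent on MSE."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_hidden)) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x: Vector) -> Tuple[Vector, Vector]:
        """Return (hidden activations, output) for one input vector."""
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return h, y

    def mse(self, xs: List[Vector], ys: List[Vector]) -> float:
        """Mean over samples of the squared error summed across outputs."""
        return sum(
            sum((p - t) ** 2 for p, t in zip(self.forward(x)[1], y)) for x, y in zip(xs, ys)
        ) / len(xs)

    def train_step(self, xs: List[Vector], ys: List[Vector], lr: float) -> None:
        """One gradient-descent step on mse(), with gradients from manual backpropagation."""
        n = len(xs)
        gw1 = [[0.0] * len(row) for row in self.w1]
        gb1 = [0.0] * len(self.b1)
        gw2 = [[0.0] * len(row) for row in self.w2]
        gb2 = [0.0] * len(self.b2)
        for x, y in zip(xs, ys):
            h, pred = self.forward(x)
            dy = [2.0 * (p - t) / n for p, t in zip(pred, y)]  # dLoss/dOutput
            for k, dyk in enumerate(dy):
                gb2[k] += dyk
                for j, hj in enumerate(h):
                    gw2[k][j] += dyk * hj
            for j, hj in enumerate(h):
                # Chain rule through the output weights and the tanh derivative 1 - tanh^2.
                dz = sum(dy[k] * self.w2[k][j] for k in range(len(dy))) * (1.0 - hj * hj)
                gb1[j] += dz
                for i, xi in enumerate(x):
                    gw1[j][i] += dz * xi
        for params, grads in ((self.w1, gw1), (self.w2, gw2)):
            for row, grow in zip(params, grads):
                for i, g in enumerate(grow):
                    row[i] -= lr * g
        self.b1 = [b - lr * g for b, g in zip(self.b1, gb1)]
        self.b2 = [b - lr * g for b, g in zip(self.b2, gb2)]


xs, ys = synthetic_dataset(64)
model = TinyMLP(n_in=3, n_hidden=8, n_out=2)
initial = model.mse(xs, ys)
for _ in range(200):
    model.train_step(xs, ys, lr=0.1)
final = model.mse(xs, ys)
print(f"training MSE: {initial:.4f} -> {final:.4f}")
```

Because the network never sees a kinematic or mechanics model, the sensor does not need to be calibrated or modeled. The model only requires paired sensor readings and ground-truth positions. In practice, held-out test data would be needed to judge accuracy.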

- “Data-Driven Shape Sensing of a Surgical Continuum Manipulator Using an Uncalibrated Fiber Bragg Grating Sensor”. IEEE Sensors Journal, 2020.
  S. Sefati, C. Gao, I. Iordachita, R. Taylor, M. Armand.
- “High-Resolution Optical Fiber Shape Sensing of Continuum Robots: A Comparative Study”. International Conference on Robotics and Automation (ICRA), 2020.
  F. Monet (co-first author), S. Sefati (co-first author), P. Lorre, A. Poiffaut, S. Kadoury, M. Armand, I. Iordachita, R. Kashyap.
- “FBG-Based Position Estimation of Highly Deformable Continuum Manipulators: Model-Dependent vs. Data-Driven Approaches”. International Symposium on Medical Robotics (ISMR), 2019.
  S. Sefati, R. Hegeman, F. Alambeigi, I. Iordachita, M. Armand.

Learning-Based Collision Detection in Continuum Robots

Conventional collision detection algorithms for continuum robots rely on some combination of an exact constrained kinematics model of the robot, geometric assumptions such as constant-curvature behavior, a priori knowledge of the geometry of environmental constraints, and/or additional sensors to scan the environment or sense contacts. We proposed a data-driven machine learning approach that uses only the available sensory information. It requires no prior geometric assumptions and no model of the continuum robot or its surrounding environment. The algorithm was implemented and evaluated on a non-constant-curvature continuum robot equipped with Fiber Bragg Grating (FBG) optical sensors for shape sensing. Results demonstrate successful collision detection in constrained environments with soft and hard obstacles of unknown stiffness and location, using only the fiber optic sensors and no vision.
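One simple way to detect collisions from sensor data alone, without a robot or environment model, is residual-based anomaly detection. This may differ from the learning method in the publications. The approach learns, from collision-free motions only, how a sensor reading normally responds to actuation, and it flags a collision when the measured reading departs from that prediction by more than a learned threshold. Several choices in the sketch below are assumptions: the single actuation input (cable displacement), the single FBG reading, the linear model, and the `k_sigma` threshold. The calibration data are made up.

```python
from statistics import mean, pstdev
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y ~= slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


class ResidualCollisionDetector:
    """Flags a collision when a reading departs from its collision-free prediction.

    Trained only on collision-free data; no robot or environment model is used.
    """

    def __init__(self, k_sigma: float = 4.0) -> None:
        self.k_sigma = k_sigma
        self.slope = self.intercept = self.threshold = 0.0

    def fit(self, actuation: Sequence[float], readings: Sequence[float]) -> "ResidualCollisionDetector":
        self.slope, self.intercept = fit_line(actuation, readings)
        residuals = [y - (self.slope * x + self.intercept) for x, y in zip(actuation, readings)]
        # Threshold: k standard deviations of the collision-free residuals (kept above zero).
        self.threshold = self.k_sigma * max(pstdev(residuals), 1e-9)
        return self

    def is_collision(self, actuation: float, reading: float) -> bool:
        residual = reading - (self.slope * actuation + self.intercept)
        return abs(residual) > self.threshold


# Made-up collision-free sweep: cable displacement vs. one FBG reading with small noise.
free_actuation = [0.1 * i for i in range(20)]
free_readings = [0.8 * x + 0.1 + (0.01 if i % 2 == 0 else -0.01) for i, x in enumerate(free_actuation)]
detector = ResidualCollisionDetector().fit(free_actuation, free_readings)
print(detector.is_collision(1.0, 0.9))  # False: consistent with free motion
print(detector.is_collision(1.0, 1.4))  # True: large deviation, treated as contact
```

A real continuum robot has several sensor channels and nonlinear, history-dependent behavior. A learned multi-output regressor or a classifier would therefore replace the single line fit, but the detection logic stays the same.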

- “Learning to Detect Collisions for Continuum Manipulators without a Prior Model”. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2019.
  S. Sefati, S. Sefati, I. Iordachita, R. Taylor, M. Armand.
- “Data-Driven Collision Detection for Manipulator Arms”. US Patent App. 16/801,272.
  S. Sefati, I. Iordachita, M. Armand.